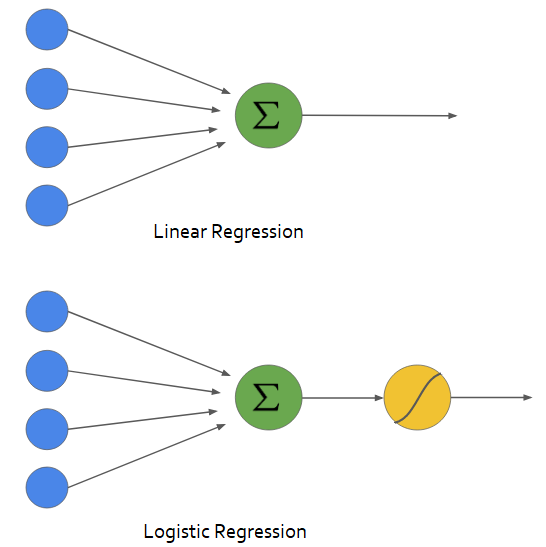

$f_2 = \alpha f_1 + \beta$. The book is a showcase of logistic regression theory and the application of statistical machine learning with Python.

Building A Logistic Regression In Python

Multicollinearity could result in significant problems during model fitting.

Multicollinearity in logistic regression with Python. We will use statsmodels, sklearn, seaborn, and bioinfokit (v1.0.4 or later). Follow the complete Python code for cancer prediction using logistic regression. The multicollinearity diagnostics you get from the REGRESSION procedure have NOTHING to do with the dependent variable and EVERYTHING to do with the relationships among the explanatory variables. Screening for multicollinearity in a regression model.

This multicollinearity occurs in both regression and classification. In reality, shouldn't you recalculate the VIF every time you drop a feature? We will also go through the measures of multicollinearity.

This was directly from Wikipedia. A section about contingency tables is also provided. Clustered standard errors in statsmodels with a multicollinearity problem.

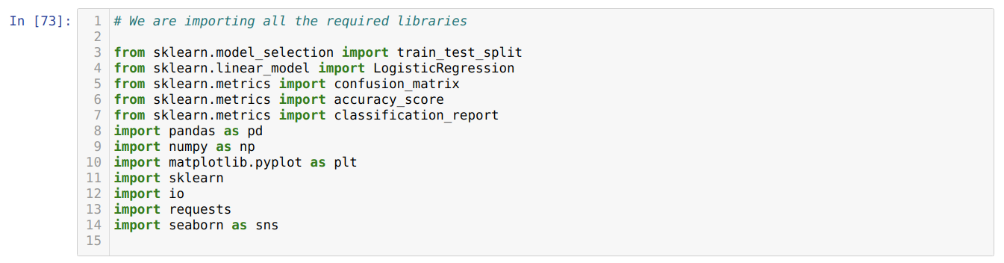

Here are the imports you will need to run to follow along as I code through our Python logistic regression model. If you have your own dataset, you should import it as a pandas DataFrame. The initial model can be considered the base model.

Logistic regression in Python. Multicollinearity corresponds to a situation where the data contain highly correlated independent variables. This is obvious, since total_pymnt = total_rec_prncp + total_rec_int.

I am using a method described by Paul Allison in his book Logistic. This is a problem because it reduces the precision of the estimated coefficients, which weakens the statistical power of the regression model. Detecting highly correlated attributes.

As defined by WallStreetMojo. If you include an interaction term (the product of two independent variables), you can also reduce multicollinearity by centering the variables. Regressors are orthogonal when there is no linear relationship between them.

Actually, it occurs in the data, not in the model. The imports are `import pandas as pd`, `import numpy as np`, `import matplotlib.pyplot as plt`, `%matplotlib inline`, and `import seaborn as sns`. In this post we'll build a logistic regression model on a classification dataset called the breast_cancer data.

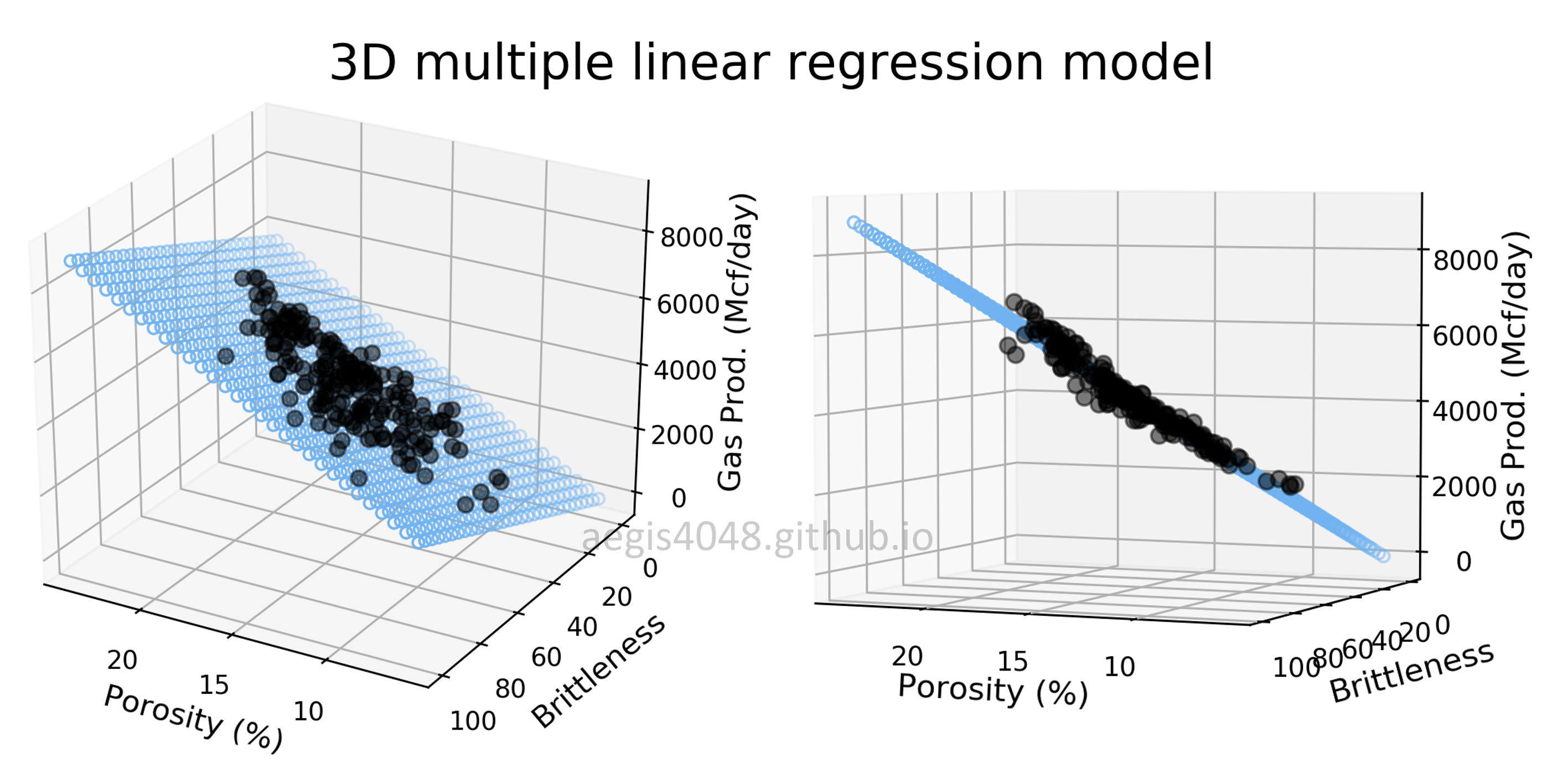

Then we'll apply PCA to the breast_cancer data and build the logistic regression model again. Multicollinearity refers to a situation in which two or more explanatory variables in a multiple regression model are highly linearly related. I am also testing for multicollinearity using logistic regression.

The independent variables (IVs) are the variables that are believed to have an influence on the outcome. Assumption 4: Absence of Multicollinearity. In other words, two features f1 and f2 are collinear if one can be written as a linear function of the other.

In a previous post of this series we looked into the issues with multiple regression models. Unfortunately, linear dependencies frequently exist in real-life data, which is referred to as multicollinearity. The DV is the outcome variable, also known as the predicted variable.

It is a situation in which two or more variables provide the same information for predicting the class label, or two or more variables are highly correlated in the data. Next, we will need to import the Titanic data set into our Python script. The process of logistic regression in Python is given below.

After that, we'll compare the performance between the base model and the PCA-based model. Height and Height² face the problem of multicollinearity. This Python file helps you understand and implement the removal of multicollinearity using Python.

We'll be using the digits dataset in the scikit-learn library to predict digit values from images using a logistic regression model in Python. Checking for Multicollinearity in Python. Linear regression in R and Python - Different results for the same problem.

For example, multicollinearity between regressors may result in large variances. Initialize the logistic regression. As we can see, total_pymnt, total_rec_prncp, and total_rec_int have VIF > 5 (extreme multicollinearity).

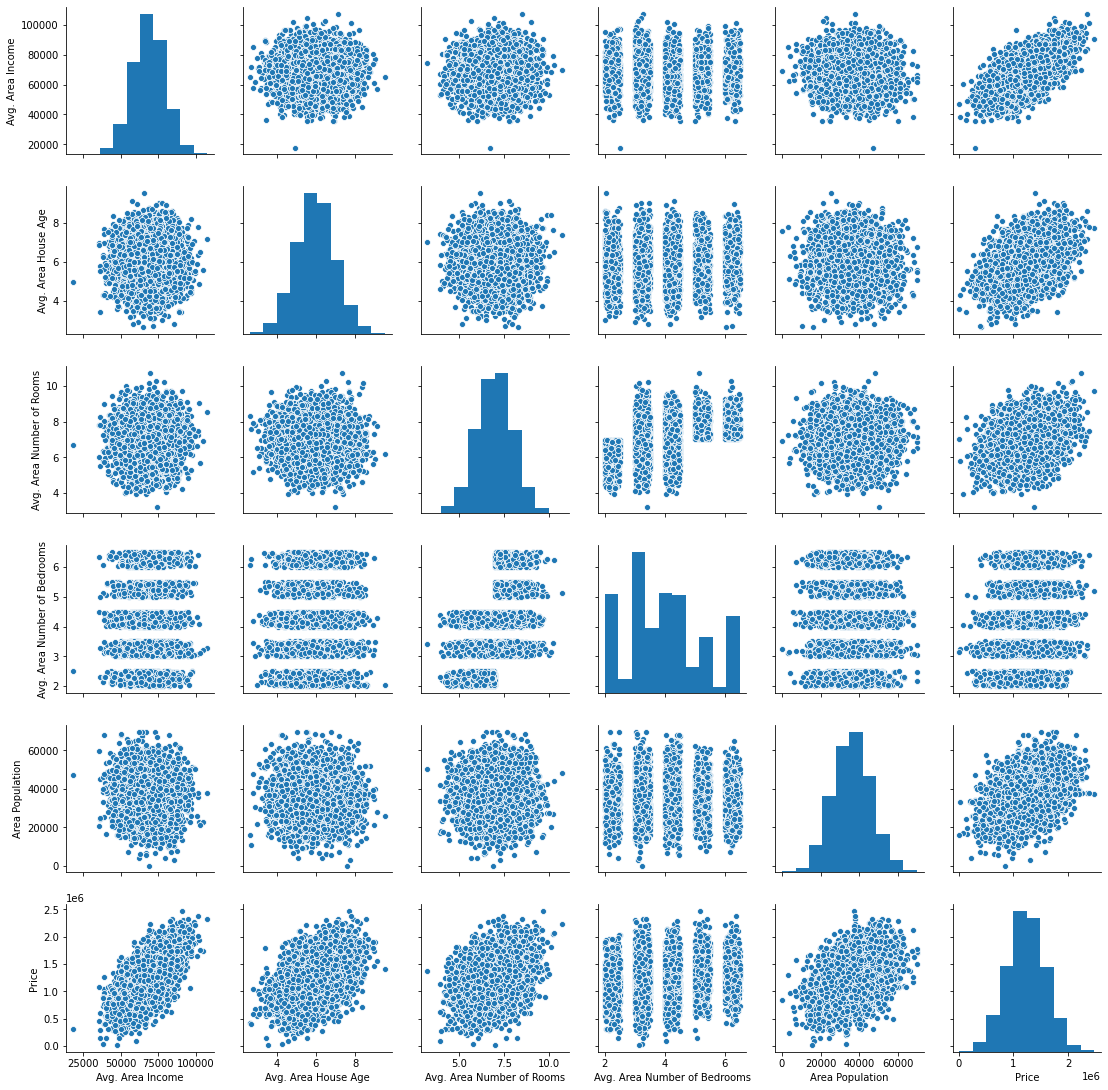

Method 1 --- Using Correlation Plot. I have all outcomes and predictors as categorical variables. In my example you'd drop both A and C, but if you recalculate the VIF of C after A is dropped, it is not going to be > 5.
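As a rough sketch of the correlation-based screening in Method 1, the snippet below lists feature pairs whose absolute Pearson correlation exceeds a cutoff. The data are synthetic, and the function name `highly_correlated_pairs` and the 0.8 threshold are assumptions made for illustration, not something prescribed by the sources quoted here.

```python
import numpy as np

def highly_correlated_pairs(X, names, threshold=0.8):
    """Return (name_i, name_j, r) for every feature pair with |r| >= threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns of X are features
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], float(corr[i, j])))
    return pairs

# Synthetic data (assumption): C is almost a linear function of A, B is independent.
rng = np.random.default_rng(0)
A = rng.normal(size=500)
B = rng.normal(size=500)
C = 2.0 * A + rng.normal(scale=0.1, size=500)
X = np.column_stack([A, B, C])

pairs = highly_correlated_pairs(X, ["A", "B", "C"])
print(pairs)  # only the (A, C) pair should be reported
```

The same matrix can be passed to a seaborn heatmap to get the correlation plot itself; the list form is just easier to act on programmatically.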

Multicollinearity is a state where two or more features of the dataset are highly correlated. Method 2 --- Using Variance Inflation Factor. In layman's terms, it's when independent.
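To make Method 2 concrete, here is a minimal NumPy sketch of the variance inflation factor, computed as 1 / (1 - R²) where R² comes from regressing each feature on all the others. This is a hand-rolled version for illustration on synthetic data; in practice statsmodels provides `variance_inflation_factor` in `statsmodels.stats.outliers_influence`. Note that only the feature matrix is used, never the target.

```python
import numpy as np

def vif(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        # Design matrix: intercept plus every column except j.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ beta
        r2 = 1.0 - resid.var() / y.var()
        out[j] = 1.0 / (1.0 - r2)
    return out

# Synthetic data (assumption): C is almost a linear function of A, B is independent.
rng = np.random.default_rng(0)
A = rng.normal(size=500)
B = rng.normal(size=500)
C = 2.0 * A + rng.normal(scale=0.1, size=500)
X = np.column_stack([A, B, C])

vifs = vif(X)
for name, value in zip(["A", "B", "C"], vifs):
    print(f"{name}: VIF = {value:.1f}")
```

With this data A and C come out far above the usual cutoff of 5, while B stays close to 1.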

In this part we will understand what multicollinearity is and how it's bad for the model. 204.1.9 Issue of Multicollinearity in Python. Import all the required packages for the logistic regression and other libraries.

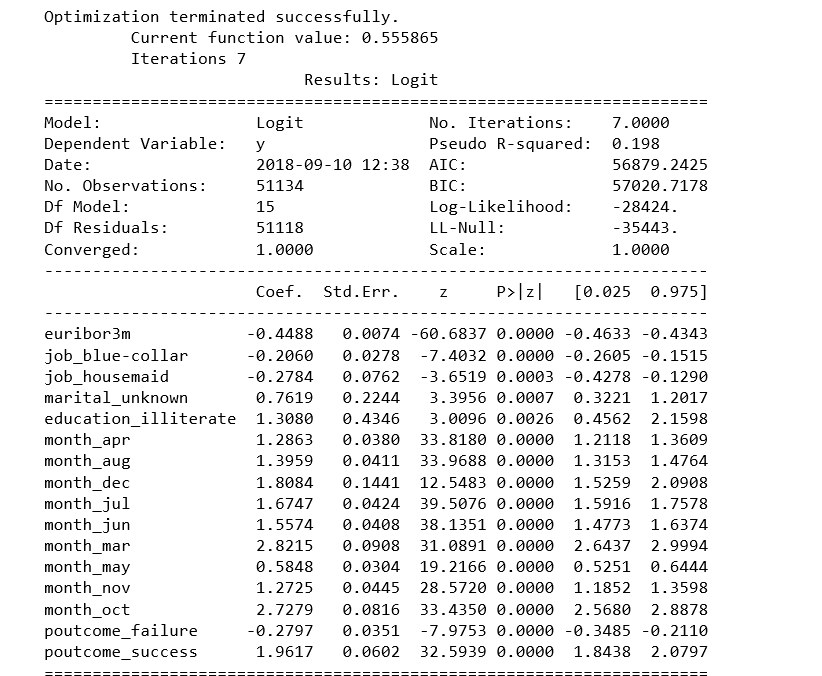

Therefore, PCA can effectively eliminate multicollinearity between features. Understand the independent dataset variables and dependent variables. Topics include logit, probit, and complementary log-log models with a binary target, as well as multinomial regression.
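The claim that PCA removes multicollinearity can be checked directly: principal component scores are mutually uncorrelated by construction. The sketch below uses a plain SVD in NumPy on synthetic data rather than the breast_cancer dataset, and `pca_scores` is a hypothetical helper name; scikit-learn's `PCA` would be the usual choice in a real pipeline, typically after standardizing the features.

```python
import numpy as np

def pca_scores(X, n_components):
    """Project centered data onto its first n_components principal directions."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T

# Synthetic data (assumption): C is almost a linear function of A, B is independent.
rng = np.random.default_rng(0)
A = rng.normal(size=500)
B = rng.normal(size=500)
C = 2.0 * A + rng.normal(scale=0.1, size=500)
X = np.column_stack([A, B, C])

feature_corr = np.corrcoef(X, rowvar=False)
scores = pca_scores(X, n_components=2)
score_corr = np.corrcoef(scores, rowvar=False)

print("corr(A, C) in the raw features:", round(float(feature_corr[0, 2]), 3))
print("corr(PC1, PC2) after PCA:", float(score_corr[0, 1]))
```

The price is interpretability: each component is a mixture of the original features, so coefficients fitted on the scores no longer map to a single variable.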

To reduce multicollinearity, let's remove the column with the highest VIF and check the results. If you loop over the features, A and C will both have VIF > 5, hence they will both be dropped. Predict the Digits in Images Using a Logistic Regression Classifier in Python.
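One way to avoid dropping both A and C is to remove a single column at a time and recompute the VIFs before deciding on the next drop. The following is a sketch under assumptions: the data are synthetic, the names `vif_from_corr` and `drop_high_vif` are hypothetical, and the VIFs are read off the diagonal of the inverse correlation matrix, which is equivalent to the regression-based definition when the features are not perfectly collinear.

```python
import numpy as np

def vif_from_corr(X):
    """VIFs are the diagonal entries of the inverse feature correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(corr))

def drop_high_vif(X, names, threshold=5.0):
    """Repeatedly drop the single worst column, recomputing VIFs after each drop."""
    X = np.asarray(X, dtype=float)
    names = list(names)
    while X.shape[1] > 1:
        vifs = vif_from_corr(X)
        worst = int(np.argmax(vifs))
        if vifs[worst] <= threshold:
            break  # every remaining feature is below the cutoff
        X = np.delete(X, worst, axis=1)
        del names[worst]
    return X, names

# Synthetic data (assumption): C is almost a linear function of A, B is independent.
rng = np.random.default_rng(0)
A = rng.normal(size=500)
B = rng.normal(size=500)
C = 2.0 * A + rng.normal(scale=0.1, size=500)
X = np.column_stack([A, B, C])

X_reduced, kept = drop_high_vif(X, ["A", "B", "C"])
print(kept)  # B plus exactly one of A or C
```

A single pass over the initial VIFs would discard both A and C; the loop keeps one of them, because its VIF falls back near 1 once its partner is gone.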

Multicollinearity is a statistical phenomenon in which two or more variables in a regression model are dependent upon the other variables in such a way that one can be linearly predicted from the others with a high degree of accuracy. A is correlated with C. Let's now jump into understanding the logistic regression algorithm in Python.

The odds ratio (OR) can be obtained by exponentiating the regression coefficients. Hope this helps you build better and more reliable linear and logistic regression models. Logistic regression models are used to analyze the relationship between a dependent variable (DV) and independent variable(s) (IV) when the DV is dichotomous.
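Since a logistic regression coefficient is a change in log-odds, exponentiating it gives the odds ratio for a one-unit increase in that variable. The coefficients below are made-up values for illustration, not output from any model discussed here.

```python
import math

# Hypothetical fitted coefficients on the log-odds scale (assumption, not real results).
coefficients = {"age": 0.05, "smoker": 0.693}

# Odds ratio = exp(coefficient).
odds_ratios = {name: math.exp(beta) for name, beta in coefficients.items()}

for name, ratio in odds_ratios.items():
    print(f"{name}: OR = {ratio:.3f}")
```

A coefficient of 0.693 corresponds to an odds ratio of roughly 2, meaning the odds of the outcome about double when that variable goes up by one unit, with the other variables held fixed.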

Split the dataset into training and test data. Centering means subtracting the mean from the independent variables' values before creating the products. So what is multicollinearity?
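The effect of centering on an interaction term is easy to see numerically. In this sketch, `x` and `z` are hypothetical independent predictors with non-zero means; the product of the raw variables is strongly correlated with `x`, while the product of the centered variables is not.

```python
import numpy as np

# Synthetic predictors (assumption): independent, with means far from zero.
rng = np.random.default_rng(0)
x = rng.normal(loc=50.0, scale=5.0, size=1000)
z = rng.normal(loc=30.0, scale=3.0, size=1000)

# Interaction built from the raw variables.
raw_corr = np.corrcoef(x, x * z)[0, 1]

# Interaction built after subtracting each variable's mean.
xc = x - x.mean()
zc = z - z.mean()
centered_corr = np.corrcoef(xc, xc * zc)[0, 1]

print(f"corr(x, x*z) before centering: {raw_corr:.2f}")
print(f"corr(x, x*z) after centering:  {centered_corr:.2f}")
```

Centering changes the interpretation of the main-effect coefficients (they now describe the effect at the mean of the other variable), but it does not change the model's fitted values.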

Logistic Regression: An Applied Approach Using Python. Detecting Multicollinearity with VIF in Python. Last updated: 29 Aug 2020. Multicollinearity occurs when there are two or more independent variables in a multiple regression model that are highly correlated with each other.

Logistic Regression Assumptions And Diagnostics In R Articles Sthda

Multicollinearity In Regression Analysis Problems Detection And Solutions Statistics By Jim

204.2.5 Multicollinearity And Individual Impact Of Variables In Logistic Regression Statinfer

Logistic Regression There Comes A Time Where You Are Given By Zaki Jefferson Analytics Vidhya Medium

Logistic Regression Multicollinearity Part 6 Youtube

Building A Logistic Regression In Python By Animesh Agarwal Towards Data Science

Multiple Linear Regression And Visualization In Python Pythonic Excursions

Detect And Treat Multicollinearity In Regression With Python Datasklr

Removing Multicollinearity For Linear And Logistic Regression By Princeton Baretto Analytics Vidhya Medium

How To Build And Train Linear And Logistic Regression Ml Models In Python

Feature Importance For Breast Cancer Random Forests Vs Logistic Regression Cross Validated

Multicollinearity In Regression Analysis Regression Analysis Regression Analysis
